Published: Tuesday, May 26, 2020

How would you feel if you discovered that invisible technologies are making critical decisions about your health care, housing, education, loans, and other essential parts of your life? How would you feel if you found out that these technologies often make errors, denying people access to essential services, and even when accurate, can exacerbate societal inequities?

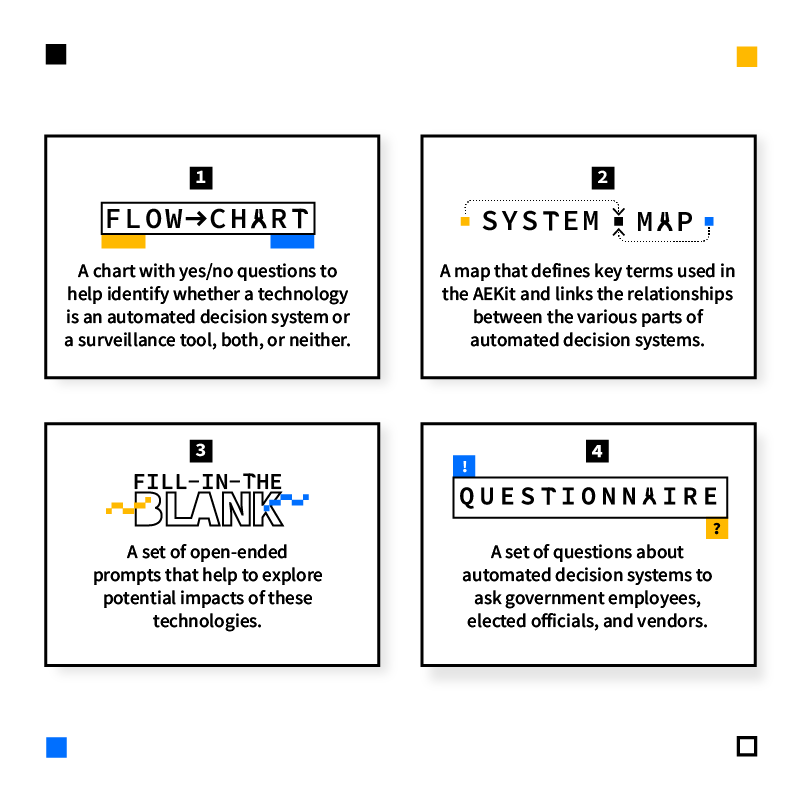

When we realized that such AI-based technologies, or automated decision systems, were being widely adopted and used by government agencies inside and outside of Washington with no public oversight, we were alarmed. These automated decision systems are fundamentally reshaping the way government agencies and other institutions make decisions in domains including criminal justice, health care, and employment. To highlight just a few examples: whether or not you get benefits like food or rental assistance, whether you go to jail and for how long, and whether you get hired may all be decided by unaccountable and non-transparent computer technology.

It is incredibly difficult for most people to evaluate these technologies. Because they are often invisible and not subject to public oversight, it is especially hard to measure whether, and to what extent, they make inaccurate or biased decisions that harm those most vulnerable.

Given these pressing concerns, the ACLU of Washington began working with partners on two key initiatives:

- Legislation that would require public oversight for any automated decision system being acquired or used by government;

- Resources to increase the capacity of the public to identify and raise concerns about automated decision systems.

We realized that in order to build capacity for robust public oversight, we needed resources that could provide guidance on how to identify automated decision systems, what issues to look for, and what questions to pose to policymakers.

After a year of collaboration, we’ve created a toolkit that does just that. The Algorithmic Equity Toolkit (AEKit) is designed to support community groups in examining public sector surveillance and automated decision systems. It consists of four key components: a flow chart for determining whether a technology involves surveillance or automated decision systems, a map for understanding key components of these systems, a fill-in-the-blank worksheet for separating intended uses from what could go wrong, and a list of crucial questions for groups to pose to public officials when interrogating new technologies.

We hope that these materials will be helpful to organizations advocating for community input on technologies being adopted. We welcome your remixes, changes, use, and reuse. Please reach out to us at ACLU WA and the Critical Platform Studies Group to tell us about the work you are doing and how you have used these resources.